A computer history deprogrammer

On Hacker News is this story on the “History of Computers”. It seems to be the sort of thing written by ChatGPT summarizing the consensus of what everyone believes about computer history, which is to say, the wrong things. This document presents an alternate history.

Aristotle was wrong about everything

Aristotle was revered as the source of all knowledge for 2000 years, but it turns out, he was wrong about most everything. Well, not so much wrong as primitive. Every new advance had to be reconciled with Aristotle’s teaching, either by pretending Aristotle said something different or by couching the new advancement in terms that didn’t conflict with Aristotle.

For example, when Galileo and Copernicus pushed the heliocentric model, much of the fight was with Aristotle’s teaching that the universe revolved around the Earth. Aristotle was the scientific consensus of the time. People pretend the conflict was mostly with the Church, but the reality is that fellow scientists of the time thought Galileo was a jerk for trashing the teachings of Aristotle in all areas, not just astronomy.

As Bertrand Russell puts it, “almost every serious intellectual advance has had to begin with an attack on some Aristotelian doctrine”. Our discussions today are a conflict between those who believe Aristotle is trash and devotees who try to pretend he’s important.

As for logic, Aristotle has his famous syllogisms. But there is a competing Stoic logic from that time with a different form of syllogisms. They were different, competing systems, but you could pretend either was the basis for modern logic. In other words, neither was. It’s just a pretense, where you tie together similar things from history.

George Boole

The same is true with Boolean logic — it’s not actually the inspiration of modern binary logic.

It’s actually Claude Shannon who is the father of binary logic. Computers were inherently binary, working from switches that were on or off. He worked backwards to create an entire mathematical theory. Shannon credits Boole with having some useful ideas, but it’s absurd to claim that Boole had any influence on the development of computers.

The point is that the history of computer logic starts with the likes of Shannon. It’s just a pretense that they were merely extending Aristotelian or Boolean logic.

Steampunk Computing … and Networking

Electromechanical devices were invented around 1822, with electric motors, gears, solenoids, switches, and so forth. They would continue up to World War II. Charles Babbage attempted to build the first computing device around 1840, and the last was the Harvard Mark I built around 1940 for the war effort (including atom bomb calculations).

Babbage’s work is given far too much credit. It’s like crediting sci-fi authors for dreaming up things that later scientists/engineers invented. His work didn’t influence future developments. Likewise, while Ada Lovelace had brilliant ideas, it’s a stretch to pretend she created the first software program. We pretend a few notes written in the margins were more important than they actually were.

The Jacquard Loom certainly deserves credit here for coming up with punch cards — inventing software before there was a computer. Punch cards, and later punched tape, would continue to be a major feature of computing until the 1980s.

The thing missing from the steampunk history is networking. Morse Code (1830s) was analog telegraphy; digital telegraphy wouldn’t arrive until around 1880 with Baudot Code. This was a 5-bit system using all capital letters that later evolved into 7-bit ASCII (1963) and the variable-length UTF-8 used today.

When Paul Baran envisioned an internetwork in the 1960s, it was based upon the way messages were received and forwarded at the telegraph office. A message would arrive on one link to an office and be punched into tape. Operators would examine the destination address, then feed the tape into a reader to transmit out another link.

This steampunk history needs to include the Enigma machine. The history of encryption is divided into two periods, the one when it was done by hand, and the other when it was done by machines. Today, it’s inconceivable that encryption would be done any other way. But with the Enigma and similar machines, this was a surprising innovation.

The steampunk description of tabulating machines is pretty accurate. However, note the shift from program to data. Jacquard’s original punch cards contained the program for a loom, whereas Hollerith’s census cards contained data.

The story of IBM is the story of how business computing was on a separate track from everything else, which continues to this day. An IBM tabulating machine had little in common with a telegraph, which likewise had little to do with a fire control computer within a missile carrier. Today, IBM mainframes continue to be a $40 billion per year business that is out of touch with the rest of the world.

Vacuum tubes

Much is made of vacuum tubes, but they were scarcely a blip on the radar between the 100 years of electromechanical computing and 100 years of transistor-based computers. The ENIAC was the first vacuum tube computer in 1945 and the first transistor computer, TRADIC, appeared in 1954. They were meaningful for scarcely a decade.

Turing, von Neumann, Shannon

We have a fetish for giving all the credit to famous people while ignoring the contributions of everyone else. I’d rank Claude Shannon as probably the most important inventor of computer science, but he’s given only a footnote if mentioned at all in history documents.

These people had brilliant ideas, as happens when a field is new and full of discovery, but the quality of their work largely comes from others who built upon their ideas later. Any half-baked idea one of these people had is credited as founding an entire subfield in computer science, not because of their genius, but because of those who came later.

General purpose computers and users

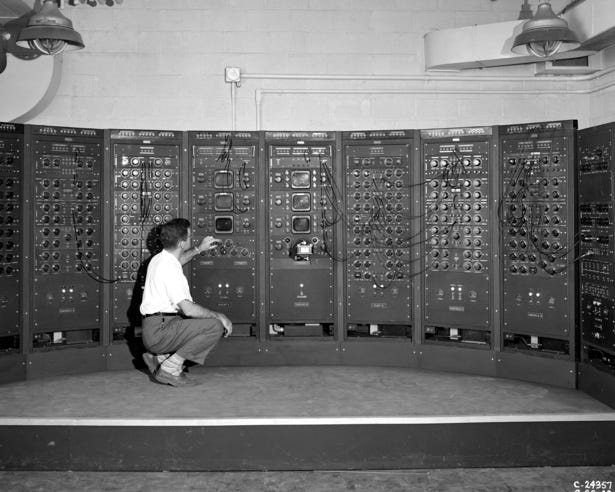

Most histories focus on the inflection point when computers got flexible programs, such as the ENIAC. Prior to that, computers were “programmed” by moving wires around so that they’d do different calculations.

A more important inflection point was when computers had interactive users, where a human being would do something and a computer would respond.

Until the late 1960s, when you saw a computer, you saw panels of switches and blinking lights. You didn’t really see a way that humans could interact with them.

At most, humans would interact with batch jobs. They would give punch cards, punched tape, and magnetic tape to an operator who would start up the machine and feed in the program and data. The computer would run the job and produce output, including cards and tape as well as printed items. A computer operator of the early 1960s spent most of their time working with such things not attached to a computer.

At some point, people figured out that they could hook up telegraph (teletypewriter) machines to a computer and interact. At first, they used the Baudot system, which is why a lot of early computers had only capital letters. Then in 1963, things swiftly converted to ASCII and the famous Teletype Model 33.

One side note is that ASCII is a protocol: it includes commands as well as data. The first 32 codes in ASCII have various actions associated with them. For example, BEL (0x07) tells the teletype to ring a bell. Going the other direction, XOFF (0x13) tells the sender to pause for a moment so the recipient can catch up. Everybody who uses the command line is familiar with this protocol, typing <ctrl-S> when they want the window to stop scrolling.

Such interaction was expensive. Each incoming character caused an interrupt on the CPU, causing it to stop whatever it was doing to spend hundreds of cycles to handle the input. Even when it was possible, people still preferred batch jobs, because they couldn’t afford the expense of the CPU cycles handling their keystrokes.
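To make the "commands as well as data" point concrete, here is a minimal sketch in Python. The helper names (`ctrl`, `is_control`) are made up for illustration. It relies only on the fact that holding Ctrl clears the upper bits of the key's code, which is how <ctrl-S> turns into XOFF.

```python
# ASCII control codes sit at 0x00-0x1F (plus DEL at 0x7F).
# Holding Ctrl while pressing a letter keeps only the low five bits
# of that letter's code, which lands in the control range.

def ctrl(key: str) -> int:
    """Return the ASCII control code produced by Ctrl+<key> (hypothetical helper)."""
    return ord(key.upper()) & 0x1F

BEL = ctrl("G")    # 0x07: ring the bell
XON = ctrl("Q")    # 0x11 (DC1): resume sending
XOFF = ctrl("S")   # 0x13 (DC3): pause sending

def is_control(byte: int) -> bool:
    """True if the byte is a command rather than a printable character."""
    return byte < 0x20 or byte == 0x7F

if __name__ == "__main__":
    for name, code in [("BEL", BEL), ("XON", XON), ("XOFF", XOFF)]:
        print(f"{name} = 0x{code:02X}, control={is_control(code)}")
```

XON (<ctrl-Q>) is the companion to XOFF: it tells the sender it may resume.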

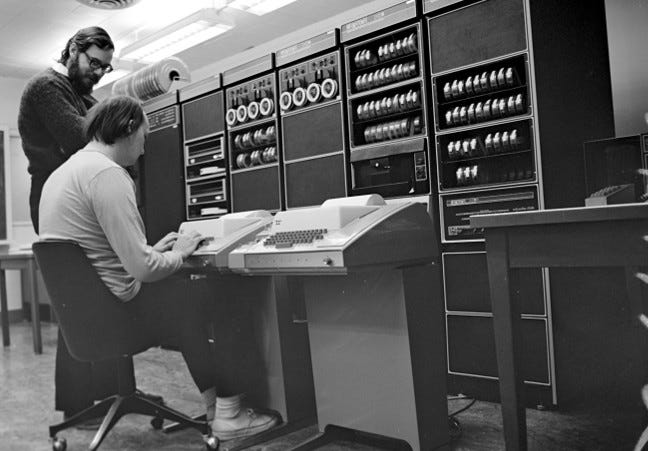

There is a famous picture of Ken Thompson and Dennis Ritchie in front of a PDP-11 using a Teletype Model 33. These are two of the creators of modern computing (C and Unix), but you can see here that they are using steampunk teletypes to interact with the computer. Notice how the teletype isn’t in their office; they have to go to the computer room to use them.

Douglas Engelbart’s Mother of All Demos (1968) is what really cemented the idea of an interactive computer. It wouldn’t be until the Macintosh (1984) that it came to fruition, but he imagined then what computers would look like today.

In short, interactive computing is really the point that computers become general purpose. Before this, programming a computer for a purpose was out of reach of most people.

Transistor

People point to the fact that the transistor (1947) is smaller and faster as the compelling factors, but in reality, it’s because it’s reliable. Electromechanical devices and vacuum tubes were so unreliable that they required maintenance after every compute job. Transistors would run for decades without wearing out. We have a name for this today, solid state. We still have the last remnant of electromechanical computing today with rotating disk hard-drives that people are slowly replacing with solid-state drives or SSDs.

One of the most important developments was the T-carrier system that started to appear around 1962. This digitized the telephone network. The telephone in the home was still analog, but would be converted to a 64-kbps stream that would then be forwarded through the network until it reached its destination, then be converted back to analog. This couldn’t happen unless it was reliable; it couldn’t work with vacuum tubes or electromechanical relays.

The Associated Press is a wire service that transmits news articles around the world. Through the 1980s, a significant percentage of their employees were members of the machinist union whose job it was to maintain the electromechanical telegraph devices.
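As a back-of-the-envelope check on the numbers involved, the sketch below works out the rates from the usual T1 parameters: 8,000 samples per second, 8 bits per sample, 24 channels, and one framing bit per frame. A channel that can only count on 7 of its 8 bits, as some data services did, gets 56 kbps instead of 64 kbps. The function names are made up for this example.

```python
SAMPLES_PER_SECOND = 8_000     # one voice sample every 125 microseconds
BITS_PER_SAMPLE = 8
CHANNELS_PER_T1 = 24
FRAMING_BITS_PER_FRAME = 1     # one extra bit per 24-channel frame

def channel_rate(usable_bits: int = BITS_PER_SAMPLE) -> int:
    """Bits per second carried by a single digitized voice channel."""
    return SAMPLES_PER_SECOND * usable_bits

def t1_line_rate() -> int:
    """Bits per second on the whole T1 line, framing included."""
    bits_per_frame = CHANNELS_PER_T1 * BITS_PER_SAMPLE + FRAMING_BITS_PER_FRAME
    return bits_per_frame * SAMPLES_PER_SECOND

if __name__ == "__main__":
    print(channel_rate())    # full 8-bit channel
    print(channel_rate(7))   # channel with only 7 usable bits
    print(t1_line_rate())    # 24 channels plus framing
```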

Moore’s Law was coined in 1965 predicting that transistors would keep shrinking at a predictable rate. It’s probably the most important concept in the history of computing. Everything that happened since was just the natural outgrowth of Moore’s Law, the time at which certain things became possible.

For example, 8-bit microprocessors appeared at the point in history when it would be possible to fit that much power into a single chip. The same is true for 16-bit then 32-bit processors.

Steve Jobs deserves a lot of credit for creating the modern mobile device, but at the same time, it’s just a matter of Moore’s Law. The year 2007 was when such devices were possible because of Moore’s Law. Such a device wasn’t practical in 2005, and everyone would be doing it by 2010 regardless of what Jobs decided.

Programming languages

The history of programming languages is broken. There are two forms. One type of language is a concrete list of commands. Another is an abstract formula.

The concrete languages are things like macro assemblers, FORTRAN, COBOL, and BASIC.

The abstract languages are things like Algol which spawned most of the languages we have today, such as C, JavaScript, Python, and pretty much every language you’ve learned. There’s also LISP, which spawned even more abstract languages, and influenced many of today’s languages.

Back around 1960, when these things were created, computers had a few kilobytes of memory and a few megabytes of storage on rotating media. You’d create a program in a language like FORTRAN by punching cards. To edit the program you’d insert or replace cards in the stack. You’d submit a job to compile it, and it’d come back the next day as perhaps a magnetic tape containing the machine code.

The more abstract languages like Algol don’t really shine until you have more powerful computers to handle them. In the early days of programming, a couple hundred lines of code was a big program. They were more theoretical in the early days than actually used.

The following picture shows NASA employee Margaret Hamilton with what people claim is the source code for the Apollo rockets. But those rockets had only 64 kilobytes’ worth of machine code. It’s hard to imagine that the source code could be that big. I suspect that only one of those binders contains the source code and all the rest contain the various requirements and calculations used to create the code.

It’s not until the invention of the C programming language that computer programming languages get interesting. I’ll get more into that below.

Networks

That discussion of networks is woefully inadequate.

Until around 1970, a link was almost always between a computer and a dumb device. Quite apart from business computing to crunch numbers, computers were used as control systems, like the Apollo guidance computer. Computers had to receive input and send commands to hardware that had no processor.

This included computer-to-human communications. A good example is the wire services that send news stories through a telegraph network, controlled by computers (like the PDP-8).

Having two computers on either end of a link, exchanging data that only computers wanted to see, was an extraordinary rarity until the 1970s.

The invention that changed things was the 8-bit microcontroller and Intel RAM chips. This allowed any dumb device to be cheaply upgraded to a smart device.

Around 1975 we saw the birth of IBM’s SDLC protocol, which allowed two smart computers to talk to each other across a point-to-point link in ways that were impossible for dumb devices. They sent packets, with checksums, and would retransmit lost packets.
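The "packets, with checksums, and retransmit" idea can be sketched in a few lines. This is not SDLC's actual frame format. It is a toy stop-and-wait link under stated assumptions: a one-byte sequence number, a 16-bit CRC from Python's `binascii.crc_hqx` standing in for the real frame check sequence, and a made-up `flaky_link` that corrupts the first transmission.

```python
import binascii

def make_frame(seq: int, payload: bytes) -> bytes:
    """Build a toy frame: sequence byte + payload + 16-bit CRC."""
    body = bytes([seq]) + payload
    crc = binascii.crc_hqx(body, 0xFFFF)
    return body + crc.to_bytes(2, "big")

def check_frame(frame: bytes):
    """Return (seq, payload) if the CRC matches, otherwise None."""
    if len(frame) < 3:
        return None
    body, received = frame[:-2], int.from_bytes(frame[-2:], "big")
    if binascii.crc_hqx(body, 0xFFFF) != received:
        return None
    return body[0], body[1:]

def send_reliably(payload: bytes, link, max_tries: int = 5) -> int:
    """Resend the same frame until it arrives intact; return tries used."""
    frame = make_frame(0, payload)
    for attempt in range(1, max_tries + 1):
        if check_frame(link(frame)) is not None:
            return attempt
    raise RuntimeError("link too noisy, giving up")

def flaky_link():
    """Hypothetical link that damages only the first frame it carries."""
    sent = {"count": 0}
    def link(frame: bytes) -> bytes:
        sent["count"] += 1
        if sent["count"] == 1:
            return frame[:-1] + bytes([frame[-1] ^ 0xFF])  # flip bits
        return frame
    return link

if __name__ == "__main__":
    tries = send_reliably(b"hello mainframe", flaky_link())
    print(f"delivered after {tries} tries")
```

A dumb device cannot do any of this: computing the checksum, noticing the mismatch, and asking again all require a processor at both ends of the link.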

But it was only a single link. The next invention was the capability of relaying a packet coming in from one link out another link, from link to link, until it reached its destination. From this, you could build a network, which IBM did. It was centered on its mainframe, but could consist of a lot of different devices that could forward traffic to/from the mainframe.

At the same time, the telecoms got into the business. Back then, they were government monopolies in charge of Post, Telegraph, and Telephone (PTTs). Their backbones were already digitized with the T-carrier system, so they could lease digital “circuits” to customers. Around 1976 they created a packet switching system called X.25 that was better suited to computers: a circuit for packets instead of bits. Traditional phone circuits were designed for a steady stream of bits, which is what you want for voice phone calls. Packet switching was better suited for computers, which were bursty, quiet much of the time with no data to send, followed by a burst of many packets.

In 1977 official standards organizations got together to merge the two types of networking, which largely overlapped, to create what would be known as OSI or Open Systems Interconnection.

That effort went nowhere. Nobody wanted the phone company’s version of packet circuits that operated like phone calls. Nobody wanted the mainframe networks designed by IBM (except IBM customers).

Instead, there were two other radically different types of networks invented, Ethernet (by Xerox) and TCP/IP Internet (by academics sponsored by the DoD). These completely broke the OSI model, and would become the basis of current networks.

Much like how people pretend Aristotle is still relevant, people pretend OSI is still relevant. It’s extremely frustrating as a techy talking to other supposed “experts” in my field who still think that the Internet works like old mainframes, such as having “layers”.

In the 1980s, there were a bunch of different network stacks like the Internet’s TCP/IP, but eventually, everyone settled on the Internet as the standard for the global network. Likewise, there were a lot of local interconnection technologies but eventually everyone settled on Ethernet as the standard. WiFi is essentially just an extension of Ethernet.

Internet

The Internet was turned on January 1, 1983.

People pretend it goes back further than that, to the ARPANET (1969). It’s not true, the ARPANET was a vastly different technology. Again, there’s the Aristotle angle, people pretend things here that really are useless.

ARPANET was afflicted with the same limited thinking of most communications, that we were talking to either dumb devices or humans. It was mostly based on the concept of a teletype. It had much in common with IBM’s mainframes, telecom’s X.25, and the OSI model of networking.

But the early 1970s saw the birth of alternatives, such as Xerox PUP/XNS and CYCLADES. Around 1978, the old TCP protocol was split into halves, an underlying Internet protocol with the TCP running on top. Apps on top of TCP would remain the same, like Telnet, FTP, and SMTP. But underneath, the Internet protocol was a radically different style of networking.

We saw the protocol published in 1981 with RFC 791, the addition of the protocol to BSD Unix in 1982, and “flag day” in 1983 when all the old ARPANET nodes were turned off and Internet nodes turned on.

The 1983 Internet was just an internal network for DoD researchers. But others wanted access, so a separate CSNET was created for them. The DoD network and CSNET would exchange TCP/IP packets, but were technically different networks. It’s more complicated than that. In the beginning, the network wasn’t thought of as just TCP/IP, but gateways that could exchange things such as email and files. Slowly it became just TCP/IP.

Other networks then connected to this growing network. Instead of gateways that converted one type of networking to another, everyone moved to TCP/IP, connected with simple links and routers. The NSFnet shouldered the burden as being the backbone from 1985 to 1995.

The important point is that the individual networks were built to interconnect themselves, with connectivity to the rest of the Internet only as an optional feature. This feature wasn’t heavily used, because it would require somebody to invest in fast links. You could remotely login with Telnet or exchange small email messages with SMTP, but trying to use FTP to transfer large files was often a problem if it went from one network to another. The NSFnet was widely abused for this, which is why the government eventually got out of the business.

Then the web was invented, and everything changed.

Personal computers

There is a standard history of computers evolving from 32-bit mainframes (mostly IBM) to 16-bit minicomputers (mostly DEC) to 8-bit microcomputers (Apple, PET, TRS-80) in the late 1970s. A mainframe would take up an entire room. A minicomputer would take up a cabinet. A microcomputer could fit on the desktop.

It’s the story I was raised on and I don’t believe it.

For one thing, I think IBM was probably the largest vendor of 8-bit microcomputers and 16-bit minicomputers in the 1970s. They weren’t in competition to the mainframe, but worked with it. The mainframe offloaded tasks onto the smaller computers as appropriate.

Most importantly, the first 8-bit microprocessor was invented to be a terminal (Datapoint 2200) attached to a more powerful computer. IBM continued this by creating ever smarter terminals in its IBM 3270 line. Whereas banks used punched cards and teletypes in the past, now they ran powerful applications with such terminals, drawing text on the screen. Their keystrokes would be buffered and sent to 16-bit minicomputers before being forwarded on to the central 32-bit mainframe, making the process efficient.

IBM thus evolved right along with Moore’s Law. It wasn’t micro/minis instead of mainframes, but included with mainframes.

I think instead the thing that broke IBM’s mainframe model was the network. Instead of thinking of the IBM PC as an adjunct to the mainframe network (as IBM intended), people realized that if they had a network, they didn’t need anything at the center.

For example, there might be an email server on the network. Communication to the email server could happen directly, instead of passing through the mainframe. Likewise, computers could store files on a file server without a mainframe.

One of the under-appreciated features of networking is file locking. Instead of a single mainframe running the app, it could be distributed among all the PCs doing their own computations, and then using locks to commit them to a central file that everyone could read. Locks prevented two PCs from interfering with each other when trying to change the same value. Programmers built networked/distributed applications that worked like they would on a mainframe, but without having a mainframe, and without having to understand networks.
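The pattern looks roughly like the sketch below. It is only an illustration under stated assumptions: it uses Unix `fcntl.flock` on a local file as a stand-in for the locks a 1980s file server offered, and the function name `add_to_shared_total` is invented for the example.

```python
import fcntl
import os
import tempfile

def add_to_shared_total(path: str, amount: int) -> int:
    """Read-modify-write a number in a shared file under an exclusive lock."""
    with open(path, "r+") as f:
        fcntl.flock(f, fcntl.LOCK_EX)       # wait until nobody else holds it
        try:
            total = int(f.read() or "0") + amount
            f.seek(0)
            f.truncate()                    # discard the old value
            f.write(str(total))
            f.flush()
            return total
        finally:
            fcntl.flock(f, fcntl.LOCK_UN)   # let the next writer in

if __name__ == "__main__":
    fd, path = tempfile.mkstemp()
    os.close(fd)
    try:
        add_to_shared_total(path, 100)         # one "PC" posts a value
        print(add_to_shared_total(path, 25))   # another adds to it
    finally:
        os.remove(path)
```

Without the lock, two writers could both read the old total and each write back their own sum, silently losing one of the updates.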

However it happened, the office networks of the 1980s made a central mainframe obsolete.

The [C, Unix, 68k/RISC] tuple

The thing that really defeated old-style computing wasn’t the 8-bit desktops like Commodore 64 or the 16-bit IBM PC, but 32-bit computing based on Unix.

These things all combine together. You can’t think of them separately.

Up to this point, every new CPU architecture needed a new custom assembly language and new custom operating system.

But then came C and Unix. The C programming language was cleverly designed as a high-level assembly language. That meant system software could be written in C then ported to any CPU architecture.

Unix was that operating system. It was soon up and running on PDP-11, VAXes, Motorola 68000, and even the x86.

Early RISC processors (MIPS, SPARC, PA-RISC) would probably not have succeeded if programmers were required to learn their custom assembly languages. It would take years to create operating-systems. They succeeded because they could quickly modify a C compiler to spit out their machine code, then quickly port Unix to run on their system. Not only did that give them a programming language and operating-system for free, it also gave them the growing base of applications running on Unix.

But RISC didn’t really appear until the end of the 1980s, with SPARC, MIPS, ARM, and PA-RISC appearing in 1986. Before that, the Unix market was driven by Motorola’s 68000.

Motorola shipped the 68000 (68k) CPU in 1979. Technically, it was a 16-bit processor like Intel’s competing 8086, but it made the radical decision to have 32-bit registers. This allowed computers and software (like Unix) to be designed like 32-bit software even if it only ran at the speeds of a 16-bit processor.

The biggest concern was memory. Big computers needed lots of memory, including things like virtual memory.

The Sun-1 from 1982, and its competitors, changed everything. Suddenly, every university department had a micro-mini-mainframe computer, a 68000 running Unix and to which they could connect cheap 8-bit terminals.

Everyone wanted a powerful VAX computer instead, but they were satisfied with a cheap 68k computer running Unix. In the late 1980s, when RISC appeared, they were faster than the VAX while costing roughly the same as the 68k, and the age of mainframes/minis being the “fast” computer was over. Now, “fast” meant the computers running on the edge of the network, the computers in the center were “slow”.

The point is that all of these things happened together. Early Unix probably wouldn’t have survived had it not been for customers wanting to run software on many CPUs. Many CPUs wouldn’t have happened without a common operating-system to run. None of this would’ve happened without a systems language like C.

I’m tempted to add the TCP/IP Internet to this list of inter-related things. The Sun-1 included a TCP/IP stack. It meant now every university department had access to a cheap “real” computer with full networking to interconnect to every other. When the Morris Worm happened in 1988, infecting primarily Sun computers, probably half of all computers on the Internet were Sun.

The roaring 80s

For nerds, the exciting thing about the 1980s is that with each cycle of Moore’s Law, the entire industry would change. It’s hard to appreciate that now. Today’s computers in 2023 are little changed from those of 2013. Indeed, a lot of people are still using the older computers because an upgrade would provide little benefit. The only real upgrade older computers need is to swap out the electromechanical spinning disk with an SSD.

The above history misses the major thing you need to know about the 1980s: the rise of the office network.

In 1980, the typical office worker had no computing device on their desktop. There might be a terminal here and there, but rarely on a person’s desktop. A person would have to move to a different desk, competing with other employees for time.

By the end of the 1980s, an office worker’s desktop was assumed to have a computer on it.

We see the Internet as ubiquitous today, but it was rare for office computers to be running a TCP/IP stack. They instead ran an office-oriented protocol suite, probably based on Xerox’s XNS, like NetWare. It was NetWare that defined the office network, not TCP/IP. And you still had mainframes hulking in backrooms. If you walked by an IBM PC in a bank, it would likely be running an IBM 3270 terminal emulator. It wasn’t until the late 1990s that you expected office workers to have access to the Internet.

An office worker’s job vastly changed. For one thing, there was email. Until this point in history, office workers never used it. This alone changed office work.

Then there was word processing, writing a document instead of typing on an electromechanical typewriter. This has implications up and down the technology stack, because if you are editing a document, you probably want to store it somewhere, such as a file server on the network.

Perhaps the craziest invention was the spreadsheet, with VisiCalc on the Apple ][ and then Lotus 1-2-3 on the IBM PC. People could conceive of email, just an electronic form of mail. But spreadsheets were completely novel, an electronic version of nothing that really existed before (unless you pretend, as the Wikipedia article does). It’s sort of a continuation of the idea of tabulation from Hollerith cards in the 1880s. For the most part, that’s what spreadsheets do, taking tables of numbers and bringing them all together.

The point here is that we often define the evolution in terms of computers getting faster and smaller. This is just Moore’s Law, and not terribly interesting. It’s these other stages in evolution that become interesting, how office workers didn’t have computers on their desks in 1980 and then had spreadsheets in 1990.

The web (1993)

In most histories, the invention of the web changed everything.

Maybe. But the NSFnet backbone traffic was already growing exponentially at this point, as everyone clamored to get on the Internet to exchange emails and files. After the web was created and started appearing on the front page of magazines and newspapers, this exponential growth simply continued at the same pace.

There was a sort of Moore’s Law rule for the Internet starting in 1983 that backbone traffic and routes would double every so often, with the rate rather constant. In much the same way that we consider technology as appearing at the appropriate point in Moore’s law, we saw the web appear at the natural point of growth of the Internet. It’s hard to see how exponential growth would’ve continued without the web.

I joined an Internet startup around this time as my first job out of college. My motivation was simply that exponential growth of NSFnet backbone traffic. The job listings in the newspaper were for old-style, business analysts. I wanted to be on the cutting edge for the future. I didn’t even consider any job that wasn’t Internet related.

Conclusion

The point of this article is to discuss history in terms other than what you’ll get with ChatGPT. History is malleable. There are the things that happened, then there’s the consensus view of what we agreed happened. That’s what ChatGPT will give you. Here, I hope to prove to you that I have valuable, independent ideas, that I can’t simply be replaced with ChatGPT.

That stack of documents next to Margaret Hamilton could easily be multiple iterations of the source code, the kind of thing where every month or so you print the whole source for reference. We were still doing that in the early 80s.

Also, I think she worked in the MIT Instrumentation Lab rather than directly for NASA.

Nice write up. I never noticed the connection between five bit characters and seven bit ascii.

This is a great article, thank you for the care with which you examined our history! I think we need to be careful when we say that a processor "is" n-bits, or even retire the phrase, as evinced by:

> Motorola shipped the 68000 (68k) CPU in 1979. Technically, it was a 16-bit processor like Intel’s competing 8086, but it made the radical decision to have 32-bit registers.

It sounds like although the instruction set operates on 32-bit words, the registers are 32 bits wide, and the address bus is 24 bits wide, because the data bus is 16 bits wide and the ALUs are 16 bits wide, the processor is described as "a 16-bit processor". But the 68008 has the same instruction set, with 20 address bits and 8 data bits, is that "an 8-bit processor"?